Recently, my team and I had the opportunity to upgrade a client’s database system from MariaDB Server 10.2 to 10.4. The client is running a legacy application and has been hesitant to embrace the latest version. However, after careful consideration and testing, we decided it was time to take the leap and introduce the upgraded MariaDB Server to their environment.

We meticulously set up a test system, ensuring that all configurations and data were mirrored from the production environment. The initial tests went smoothly, and everything seemed to check out. Encouraged by the successful trial, we proceeded with the upgrade across the entire production setup.

For the first few months, the upgraded database performed admirably, meeting the client’s expectations. However, after approximately three months, we began encountering a perplexing issue: a specific but infrequently used query started locking up the database, significantly impacting the system’s overall performance. What used to be a swift operation, taking mere seconds, now lingered for minutes, causing frustration and impeding critical business processes.

The problem: Optimizer Switch Changes

Too Many Rows?

Our initial hypothesis was that the increase in data volume might be responsible for the degradation in performance. One of the key tables had grown from 200 million rows to over 300 million rows since the upgrade. We suspected a threshold effect.

A threshold effect refers to a situation where a specific condition or factor reaches a certain threshold, causing a noticeable change in the query’s performance characteristics. This effect is often observed when the data volume or system resources exceed a particular threshold, such as requiring disk-based tables, leading to a sudden degradation in query execution time or overall system responsiveness.

To validate this assumption, we conducted tests on a separate MariaDB Server with significantly more resources. We learned that they query was CPU-bound and despite using a CPU with nearly 4x the power, the query was still over 20X slower than before.

Time to Downgrade?

Digging deeper, we decided to test the current data on a MariaDB server running version 10.2. Surprisingly, the query performed just as well as before, further debunking our theory that the data size was the root cause of the issue.

This was perplexing as upgrading is supposed to improve performance. So we had to dig into the upgrade notes.

Optimizer Switches

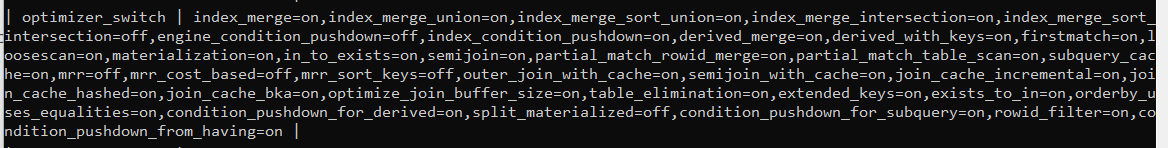

In the 10.4 version, MariaDB had implemented some alterations to the default behavior of optimizer switches, which affected the query planning and execution. These modifications aimed to improve performance for certain scenarios, but in our case, they had an adverse effect on the query in question.

Realizing the significance of these changes, we began experimenting with different optimizer flags to find the optimal configuration for our client’s workload. We consulted the MariaDB documentation. Specifically, a list of changes of default optimizer switch settings in various version of MariaDB.

Through incremental adjustments and meticulous benchmarking, we finally found a combination of optimizer flags that addressed the performance degradation issue. By reverting certain flags back to their 10.2 defaults and adjusting others to align with the specific workload characteristics, we were able to restore the query’s swift execution, completing it within the expected time frame.

Query Optimization is Hard

This experience served as a powerful reminder of the intricate nature of query optimization and the profound impact that seemingly minor changes in optimizer flags can have on overall performance. It reinforced the importance of thorough testing, both during the initial upgrade process and in the long run, to uncover any unexpected consequences and mitigate potential performance bottlenecks.

The 10.4 upgrade had improved about 15 different queries. They went from taking 2-3 seconds to sub-1 second — which met our client’s performance objectives. However, this one query had went from taking 8 seconds to over 30 minutes.

In this case, we updated the my.cnf configuration with:

optimizer_switch = 'split_materialized=off'

This resolved the issue. In their next code update, they will pass this value in their code for the impacted query. This will leave the other queries to benefit from the upgrade.

Lessons Learned

In the end, our client was relieved to see their database system operating smoothly once again. This incident also deepened our understanding of MariaDB Server’s optimizer flags and sharpened our skills in optimizing query performance in complex environments. It reminded us of the ever-evolving nature of database technologies and the need for constant vigilance when managing critical systems.

As we continue to support our clients’ database infrastructures, we carry this valuable experience with us, ensuring that we stay abreast of the latest developments and provide the most efficient solutions for their evolving needs.